Cloudflare outages have become increasingly prominent events in the digital landscape, disrupting widely used applications such as ChatGPT, X (formerly Twitter), and Spotify. These incidents expose the fragility of centralized web infrastructure and highlight the cascading effects when a critical internet layer fails.

Developers, IT managers, tech leaders, and general users all benefit from understanding how a Cloudflare outage occurs, why it matters, and how to mitigate similar risks. That understanding underpins business continuity and user trust in a highly interdependent digital world where downtime has far-reaching consequences.

This blog post explores the technical root causes behind the recent Cloudflare outages, using case studies of several major platforms to illustrate the underlying issues and demystify the architecture behind these disruptions.

It also offers practical strategies to help organizations reduce their exposure to single points of failure (SPOF) and to the systemic risks present in centralized internet infrastructure.

Key Concepts and Frameworks: Understanding Cloudflare and Internet Infrastructure

Cloudflare is a leading global internet infrastructure provider whose services improve online performance, security, and reliability, including:

- A powerful Content Delivery Network (CDN)

- Robust Distributed Denial of Service (DDoS) protection

- An advanced Web Application Firewall (WAF)

- Reliable Domain Name System (DNS) management solutions

These critical services work together to secure, accelerate, and efficiently route web traffic, supporting millions of websites and applications worldwide, including prominent platforms such as ChatGPT, X (formerly Twitter), and Spotify.

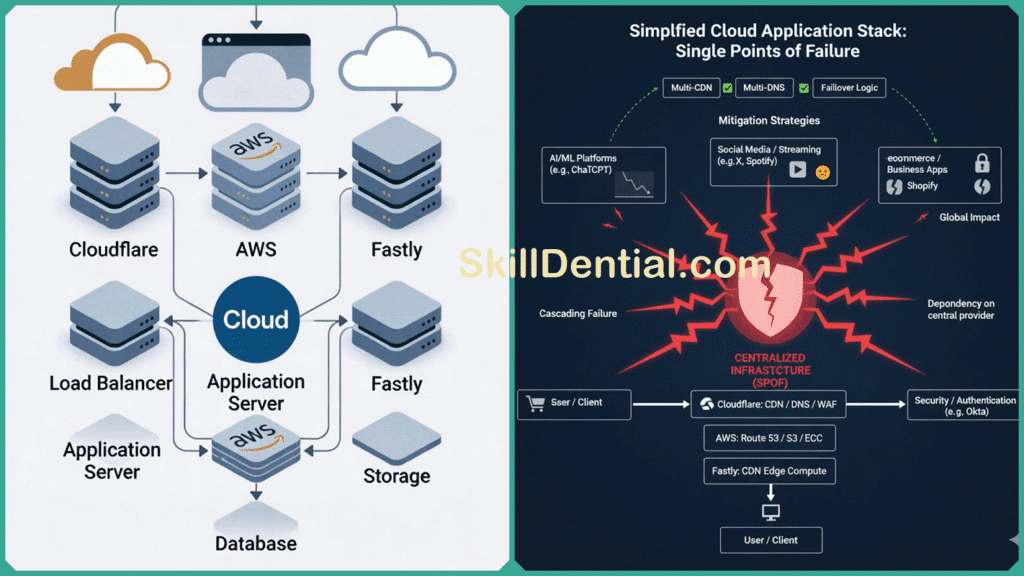

Because Cloudflare sits between end users and these applications, a disruption within its network can affect thousands of digital services at once. This central role makes Cloudflare both indispensable and a potential weak point in the architecture of the modern Internet.

A Single Point of Failure (SPOF) in system design refers to any component whose failure completely halts the function of the overall system. When organizations rely exclusively on Cloudflare for CDN, DNS, or routing functionalities, Cloudflare represents a SPOF; if Cloudflare’s services encounter an outage, applications dependent solely on it can become unreachable regardless of their own internal health.

The SPOF risk becomes evident during outages, when widely used services such as ChatGPT, X, and Spotify suddenly become unreachable. The cause is a failure at Cloudflare, the intermediary, rather than in the services themselves: their internal systems and backend operations often keep running normally while end users see downtime.
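
The effect of a SPOF on availability can be made concrete with a little arithmetic. The sketch below is illustrative only: the availability figures are hypothetical, the function names are invented for this example, and the redundancy formula assumes provider failures are independent, which real providers only approximate.

```python
from math import prod


def serial_availability(availabilities):
    """Availability of a chain in which every component must be up."""
    return prod(availabilities)


def redundant_availability(availabilities):
    """Availability of a group in which any one component is enough.

    Assumes failures are independent (an idealization).
    """
    return 1 - prod(1 - a for a in availabilities)


# Hypothetical figures for illustration only.
origin = 0.9995
single_cdn = 0.999
second_cdn = 0.999

# One CDN in front of the origin: both must be up.
single_path = serial_availability([origin, single_cdn])

# Two CDNs in front of the origin: either CDN is enough.
dual_path = serial_availability(
    [origin, redundant_availability([single_cdn, second_cdn])]
)

print(f"single CDN in front of origin: {single_path:.6f}")
print(f"two CDNs in front of origin:   {dual_path:.6f}")
```

With these made-up numbers, the single-CDN path is available roughly 99.85% of the time and the dual-CDN path roughly 99.95%, at which point the origin itself becomes the limiting component.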

Systemic risk in the context of centralized internet infrastructure describes how the failure of one crucial entity (like Cloudflare) triggers a domino effect across many dependent systems worldwide. The modern internet’s infrastructure is concentrated among a handful of providers such as Cloudflare, Amazon Web Services (AWS), and Fastly.

High-profile outages in these providers reveal the fragility and systemic vulnerability of this centralized model. These incidents emphasize the need for organizations to consider redundancy and resilience strategies to mitigate cascading impacts and maintain business continuity.

Recent Cloudflare outage case studies (including November 18, 2025) show how software bugs, configuration errors, or automated internal processes can trigger massive service disruptions. For example, a misconfiguration led to cascading failures in Cloudflare’s load balancer health monitoring, which is responsible for directing global traffic.

This resulted in HTTP 500 server errors across many Cloudflare-dependent services worldwide, affecting millions of users trying to access platforms like ChatGPT, X, and Spotify. Importantly, these outages have repeatedly been confirmed to stem from internal failures in a highly complex infrastructure network, not from cyberattacks.

Such case studies illustrate both the technical and business implications of SPOFs and systemic risks in internet services. They also clarify why relying heavily on a single infrastructure provider exposes organizations to significant reputational harm, lost revenues, and operational interruptions.

Consequently, adopting multi-CDN strategies, diversifying DNS providers, and implementing effective failover and graceful degradation mechanisms emerge as essential best practices to improve resilience and mitigate the fallout from similar outages.

In Summary

Cloudflare acts as a cornerstone internet infrastructure provider offering critical services like CDN, DNS, and WAF. Its vast network reach makes it both a powerful accelerator for web applications and a potential single point of failure.

The systemic risk inherent in centralized infrastructure means that failures at this level can cause wide-ranging outages across multiple sectors, highlighting the importance for businesses, developers, and IT leaders to plan for redundancy, diversify their infrastructure dependencies, and implement robust monitoring and mitigation strategies.

With this understanding, professionals can assess outage risks more accurately, make well-informed decisions, and justify investment in diversified infrastructure, which in turn helps maintain business continuity as internet dependencies grow more complex and interconnected.

Case Studies of Cloudflare Outages

On November 18, 2025, Cloudflare suffered a significant outage that disrupted global access to major platforms, notably ChatGPT. The outage began around 11:20 UTC and was traced to a misconfiguration involving an automatically generated file used to manage threat traffic. The file grew beyond operational limits, causing cascading failures within Cloudflare’s load balancer health monitoring system.
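
One general defence against an automatically generated file growing past what its consumer can handle is to validate each new version before loading it and keep the last known good version otherwise. The sketch below illustrates that idea only; it is not Cloudflare’s code, and `FeatureFile`, `MAX_ENTRIES`, and `select_file_to_load` are hypothetical names with a hypothetical limit.

```python
from dataclasses import dataclass


@dataclass
class FeatureFile:
    """Hypothetical stand-in for an automatically generated rules file."""
    version: int
    entries: list


MAX_ENTRIES = 100  # hypothetical hard limit in the consuming service


def select_file_to_load(candidate, last_known_good, max_entries=MAX_ENTRIES):
    """Return the candidate if it passes validation, else the last good file."""
    if len(candidate.entries) > max_entries:
        # Too large for the consumer: refuse it instead of crashing later.
        return last_known_good
    if len(set(candidate.entries)) != len(candidate.entries):
        # Duplicate rows suggest the generation step misbehaved.
        return last_known_good
    return candidate
```

The design choice here is that a rejected file leaves the service running on slightly older rules, which is usually preferable to failing every request.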

Load balancers are crucial for directing user traffic across multiple healthy servers to maintain performance and availability. The failure in this system meant Cloudflare could not accurately determine which servers were healthy or how to route traffic, leading to widespread HTTP 500 internal server errors and the activation of protective measures that blocked legitimate user requests.
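
To show what health-based routing involves, here is a minimal sketch of a pool that stops sending traffic to a backend after several consecutive failed checks. The class name, the threshold, and the fail-open behaviour are assumptions made for this example, not a description of Cloudflare’s load balancer.

```python
from itertools import count


class HealthCheckedPool:
    """Round-robin over backends that have not failed too many checks in a row."""

    def __init__(self, backends, failure_threshold=3):
        self.backends = list(backends)
        self.failure_threshold = failure_threshold
        self.consecutive_failures = {b: 0 for b in self.backends}
        self._counter = count()

    def record_check(self, backend, ok):
        """Feed in the result of one health probe."""
        if ok:
            self.consecutive_failures[backend] = 0
        else:
            self.consecutive_failures[backend] += 1

    def healthy(self):
        """Backends currently below the failure threshold."""
        return [b for b in self.backends
                if self.consecutive_failures[b] < self.failure_threshold]

    def pick(self):
        """Return the next healthy backend; fail open if none look healthy."""
        candidates = self.healthy() or self.backends
        return candidates[next(self._counter) % len(candidates)]
```

Failing open, meaning routing to every backend when all of them appear unhealthy, is one way to keep a fault in the monitoring layer from becoming a total outage. Whether that trade-off is right depends on the service.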

Although ChatGPT’s backend systems kept running, the collapse in traffic routing at Cloudflare made the service inaccessible to users worldwide. Users saw error messages prompting them to “unblock Cloudflare domains,” which prevented them from reaching the platform even though the backend was healthy.

Other high-profile platforms, including X (formerly known as Twitter), Spotify, Uber, and Canva, encountered similar widespread disruptions during this period. Users on these services experienced intermittent outages, frequent internal server errors, and service interruptions that lasted for several hours at a stretch.

Downdetector, a popular outage tracking website, showed significant spikes with thousands of error reports being logged simultaneously, clearly illustrating the extensive breadth and scale of the impact. The outage didn’t spare Cloudflare’s own infrastructure either; its API and dashboard services were affected, which severely limited the company’s ability to swiftly manage and remediate the incident in real time.

Losing access to its own management tools made the incident harder to diagnose and lengthened the time needed to restore normal operations.

Recurring Patterns of Failure: The Systemic Risk

A closer technical examination revealed that this outage was due to internal system degradation rather than hardware failure or an external cyberattack. Cloudflare engineers confirmed the root cause was a latent software bug exacerbated by automated file growth and routine maintenance activities. This exemplifies the challenge of managing intricate cloud infrastructure whose many interconnected components can propagate errors system-wide.

Looking beyond November, previous 2025 Cloudflare outages further confirm the patterns of vulnerability in massive cloud networks. For example, the June 12, 2025, outage affected several Cloudflare services, including Workers KV, WARP, and Workers AI.

Failures in Workers KV and its dependencies caused elevated error rates (HTTP 503 and 500 responses), preventing client connections and dashboard logins. Video streaming services and image processing saw near-total failure at peak times, and automated traffic management failed to reroute all CDN requests successfully.

These incidents show that many of the platform’s most critical functions depend on a variety of Cloudflare subsystems, so an internal disruption in one of them can quickly produce far-reaching downstream effects for services and users.

Another notable 2025 incident occurred on September 12, 2025, when a faulty dashboard update resulted in the Cloudflare control plane effectively self-DDoSing. A dashboard bug flooded the Tenant Service API with redundant calls, causing a cascade failure in control-plane APIs and dashboards, although the core CDN network remained unaffected.

The incident showed that even a relatively simple coding error in a management layer can have an outsized impact and disrupt a large number of customers.
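
A common general-purpose way to keep clients from amplifying load on a struggling API is to space out retries with exponential backoff and random jitter. The sketch below illustrates that technique; it is not the fix Cloudflare applied, and the function name and default values are assumptions.

```python
import random


def backoff_delays(attempts, base=0.5, cap=30.0, rng=None):
    """Yield 'full jitter' retry delays in seconds.

    Each delay is drawn uniformly between 0 and an exponentially growing
    ceiling, capped at `cap`, so retries from many clients spread out
    instead of arriving in synchronized waves.
    """
    rng = rng or random.Random()
    for attempt in range(attempts):
        ceiling = min(cap, base * (2 ** attempt))
        yield rng.uniform(0, ceiling)


# Example: the delays one client might wait before each of five retries.
for delay in backoff_delays(5):
    print(f"wait {delay:.2f}s before retrying")
```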

These case studies reinforce key lessons about the systemic risks of heavily centralized service providers like Cloudflare. They show how single points of failure in load balancers, threat management, or control plane software can cascade into widespread outages affecting millions of end users and critical applications globally.

Businesses and IT architects must recognize the operational consequences of these failures—lost user trust, revenue disruption, and damaged reputations—and accordingly invest in resilience strategies like multi-CDN deployments, multi-DNS configurations, failover mechanisms, and robust incident monitoring.

In Summary

Cloudflare’s 2025 outages, from the highly disruptive November incident that affected platforms such as ChatGPT and X to earlier failures during the year, illustrate the multifaceted risks inherent in modern cloud infrastructure.

These breakdowns stemmed primarily from internal software bugs and misconfigurations that disabled essential routing and security functions, not from external cyberattacks.

The ripple effects across well-known internet services underline the importance of systemic preparedness and architectural diversification for keeping digital services reliable and resilient.

Current Trends and Developments in Cloud Resilience

The recent series of high-profile Cloudflare outages has raised awareness of resilience and accelerated the adoption of more sophisticated high-availability strategies across internet services. The disruptions underscored the importance of robust infrastructure planning.

As a result, several key trends are currently shaping the way IT architects and business leaders approach the challenge of mitigating systemic risks that arise from relying heavily on centralized infrastructure providers. These trends emphasize diversification, redundancy, and proactive risk management to protect business continuity.

Increasing Adoption of Multi-CDN Strategies

A prominent lesson from Cloudflare’s outages is the importance of not depending on a single CDN provider. Single-CDN architectures create a Single Point of Failure (SPOF) that can make a site or service completely unavailable during provider-level disruptions.

Multi-CDN strategies mitigate this risk by distributing web traffic across multiple CDN providers, creating redundancy that enables automatic failover if one provider experiences issues. Beyond resilience, multi-CDN also improves overall site performance by intelligently routing traffic through the fastest or least congested network regionally.

Organizations leverage multi-CDN services that provide real-time traffic management, enabling seamless switching between CDN vendors without user impact. This approach is becoming best practice for mission-critical applications and high-traffic websites.
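
The core of multi-CDN traffic steering can be sketched as a weighted choice restricted to providers that currently pass health checks. The provider names, weights, and the `choose_cdn` function below are hypothetical; production systems usually make this decision at the DNS or edge layer using live latency and availability data.

```python
import random


def choose_cdn(weights, healthy, rng=random):
    """Pick a CDN by weight among providers currently marked healthy.

    weights: dict of provider name -> relative traffic share
    healthy: set of provider names that passed recent health checks
    """
    eligible = {name: w for name, w in weights.items()
                if name in healthy and w > 0}
    if not eligible:
        raise RuntimeError(
            "no healthy CDN available; serve from origin or a static fallback"
        )
    names = list(eligible)
    return rng.choices(names, weights=[eligible[n] for n in names], k=1)[0]


# Hypothetical split: 70% to cdn_a and 30% to cdn_b while both are healthy.
weights = {"cdn_a": 70, "cdn_b": 30}
print(choose_cdn(weights, healthy={"cdn_a", "cdn_b"}))

# If cdn_a fails its checks, all traffic shifts to cdn_b.
print(choose_cdn(weights, healthy={"cdn_b"}))
```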

Diversification of DNS Providers

DNS is another crucial dependency layer vulnerable to SPOF, as DNS resolution failures block user access even if applications themselves are healthy. Forward-thinking organizations are implementing multi-DNS or multi-provider configurations that dynamically redirect DNS queries to backup providers during outages.

Techniques include DNS load balancing and automatic failover to alternative DNS servers, which help maintain domain resolution continuity. This DNS diversification alongside multi-CDN architectures adds critical resilience layers, creating multiple redundant access paths to online services and minimizing downtime caused by infrastructure failures.
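
A quick sanity check on DNS diversification is whether a domain’s nameservers belong to more than one operator. The sketch below takes a list of nameserver hostnames that has already been looked up and groups them naively by their last two labels. That heuristic is an assumption made for brevity: it miscounts providers that spread nameservers across several domains, as well as multi-label suffixes such as `co.uk`, so a real audit would map hostnames to known providers instead.

```python
def dns_operators(nameservers):
    """Group nameserver hostnames by a naive operator key (last two labels)."""
    operators = {}
    for host in nameservers:
        labels = host.rstrip(".").lower().split(".")
        key = ".".join(labels[-2:])
        operators.setdefault(key, []).append(host)
    return operators


def has_dns_redundancy(nameservers):
    """True when the nameservers appear to span at least two operators."""
    return len(dns_operators(nameservers)) >= 2


# Placeholder hostnames, not real providers.
single = ["ns1.provider-a.example.", "ns2.provider-a.example."]
mixed = ["ns1.provider-a.example.", "ns1.provider-b.example."]
print(has_dns_redundancy(single), has_dns_redundancy(mixed))
```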

Enhanced Monitoring and Graceful Failure Engineering

Sophisticated monitoring techniques also play a vital role in managing cloud infrastructure risks. Digital Experience Monitoring (DEM) tools provide detailed insights into end-user experience, alerting teams to anomalies often before customers notice disruptions. Layered alerting systems escalate incidents efficiently across operations teams, enabling rapid response.
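
Layered alerting often comes down to escalating as consecutive probe failures accumulate. The following sketch maps a history of synthetic check results to an alert tier. The tier names and thresholds are hypothetical and would be tuned to each service.

```python
def alert_level(recent_results, warn_after=2, page_after=5):
    """Map probe results (oldest first, newest last) to an alert tier.

    Counts the run of consecutive failures at the end of the sequence,
    so a single blip does not page anyone but a sustained failure does.
    """
    streak = 0
    for ok in reversed(recent_results):
        if ok:
            break
        streak += 1
    if streak >= page_after:
        return "page"
    if streak >= warn_after:
        return "warn"
    return "ok"
```

For instance, with the defaults above, two failed probes in a row would raise a warning for the on-duty team, and five in a row would page the on-call engineer.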

Furthermore, application designers are embracing the principle of graceful degradation—allowing applications to maintain partial functionality, fallback modes, or read-only states when upstream networks or APIs fail.

By designing applications anticipating these failure modes, businesses preserve user engagement and operational continuity even amid infrastructure outages. This combination of proactive monitoring and graceful failure design is essential to building robust, user-centric internet services in today’s complex network environment.
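
One simple form of graceful degradation is serving the last good copy of some content when the upstream fetch fails. The sketch below is a minimal illustration under assumed names (`StaleOnErrorCache`, `fetch`, `ttl_seconds`); a real application would also bound the cache size and decide how stale is too stale.

```python
import time


class StaleOnErrorCache:
    """Serve fresh data when possible and the last good copy when upstream fails."""

    def __init__(self, fetch, ttl_seconds=60, clock=time.monotonic):
        self._fetch = fetch          # callable: key -> value, may raise
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}             # key -> (value, stored_at)

    def get(self, key):
        """Return (value, is_stale) for the given key."""
        cached = self._store.get(key)
        now = self._clock()
        if cached and now - cached[1] < self._ttl:
            return cached[0], False
        try:
            value = self._fetch(key)
        except Exception:
            if cached:
                # Degrade: the copy is past its TTL but still usable.
                return cached[0], True
            raise  # nothing cached, so the failure must surface
        self._store[key] = (value, now)
        return value, False
```

Returning the `is_stale` flag lets the user interface show a notice such as a read-only banner, which supports the transparent error handling described above.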

Together, these trends point to a shift towards a more distributed and resilient internet architecture. Businesses that invest in multi-CDN deployments, DNS diversification, real-time monitoring, and failure-aware application design are better positioned to withstand the systemic risks in the internet’s infrastructure.

By adopting these approaches, companies can reduce downtime, keep services available, and maintain user trust in a digital environment that demands high reliability.

Actionable Strategies for IT and Business Professionals to Mitigate Cloudflare Outage Risks

Addressing the vulnerabilities revealed by Cloudflare outages requires deliberate planning and strategic technical investment. The following strategies give IT teams and business leaders a framework for improving resilience and business continuity:

- Map Your Dependency Graph: Start by thoroughly identifying all external service dependencies your applications rely upon that could pose Single Points of Failure (SPOF). This includes all CDN providers, DNS services, APIs, authentication systems, and monitoring tools. Map their relationships and criticality to your operations. This comprehensive dependency graph enables informed risk assessment and prioritization of mitigation efforts.

- Implement Multi-CDN Solutions: Adopt a multi-CDN architecture to distribute your web traffic across multiple Content Delivery Network providers. Use intelligent, real-time traffic routing mechanisms to direct users to the optimal CDN based on latency, availability, or geographic location. This reduces reliance on a single provider like Cloudflare and allows seamless failover in case one CDN experiences outages. Multi-CDN strategies have proven effective in minimizing downtime and maintaining performance during provider disruptions.

- Diversify DNS Providers with Automatic Failover: Employ at least two reliable DNS providers configured for failover. If the primary DNS service fails or becomes unreachable, your DNS queries automatically resolve via the secondary provider, ensuring continuity in domain name resolution. Implement DNS load balancing and dynamic failover mechanisms to further enhance resilience and reduce downtime caused by DNS provider outages.

- Use Load Balancers with Health Checks: Configure load balancers equipped with real-time health checks that continuously monitor the status of backend servers and network nodes. When unhealthy or unresponsive nodes are detected, traffic is automatically rerouted to healthy nodes or alternate providers, preventing users from experiencing failures. This proactive routing adjustment is critical to maintaining uptime and performance even during partial infrastructure failures.

- Design Application-Level Fallbacks and Graceful Degradation: Incorporate graceful degradation principles into your application design. This means planning fallback modes, such as read-only operation, cached content delivery, or reduced functionality, enabling users to continue engaging with your services during upstream failures. Transparent error handling and user notifications help maintain trust and reduce user frustration in case of connectivity issues.

- Invest in Comprehensive Monitoring and Alerting: Use Digital Experience Monitoring (DEM), synthetic transaction monitoring, and layered alerting systems that provide deep insights into user experience and infrastructure health. Early detection of anomalies enables rapid incident response, limiting outage duration and impact. Alert escalation workflows ensure the right teams are mobilized efficiently to troubleshoot and mitigate emerging issues before they affect a broad user base.
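
The first strategy, mapping dependencies, can start as a small table of which providers back each capability of each application. The sketch below flags capabilities served by exactly one provider. The application names, capability names, and provider names are all hypothetical.

```python
def find_spofs(dependencies):
    """Return, per application, the capabilities served by exactly one provider.

    dependencies: dict of app -> dict of capability -> list of providers
    """
    spofs = {}
    for app, capabilities in dependencies.items():
        single = {cap: providers[0]
                  for cap, providers in capabilities.items()
                  if len(set(providers)) == 1}
        if single:
            spofs[app] = single
    return spofs


# Hypothetical inventory for two applications.
inventory = {
    "storefront": {"cdn": ["cdn_a"], "dns": ["dns_a", "dns_b"]},
    "api": {"cdn": ["cdn_a", "cdn_b"], "dns": ["dns_a", "dns_b"]},
}
print(find_spofs(inventory))
```

In this made-up inventory only the storefront’s CDN is flagged, which is where mitigation effort would go first.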

Together, these strategies form a multi-layered defense against the systemic risks highlighted by Cloudflare’s outages and similar large-scale incidents.

By implementing them, IT and business professionals can improve uptime, protect revenue, and maintain user confidence within a complex and highly interconnected cloud ecosystem.

Why Tech Leaders and Business Owners Should Care About Cloudflare Outages

High-profile outages like the Cloudflare incident in November 2025 carry significant reputational and financial consequences for affected businesses. ChatGPT’s downtime, for instance, disrupted productivity for millions of users globally, spotlighting how critical internet services have become to daily operations across industries.

When outages occur frequently or last for extended periods, they erode user trust and customer loyalty—intangibles that are often more valuable and harder to regain than direct revenue losses. For tech leaders and business owners, protecting these assets must be a strategic priority to sustain long-term success.

Quantifying and understanding the business risks tied to infrastructure outages is essential for informed decision-making. Downtime translates into lost sales, operational delays, increased support costs, and even potential damage to brand credibility.

These cumulative impacts can be enormous, especially for digital-first enterprises relying heavily on cloud services and online platforms. Leaders must weigh these risks when evaluating investments in infrastructure resilience and disaster recovery planning to safeguard their company’s competitive position.
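
A first-pass estimate of the direct cost of an outage is simple arithmetic. The sketch below uses entirely hypothetical figures and a hypothetical `downtime_cost` function, and it deliberately ignores harder-to-measure effects such as churn and reputational damage.

```python
def downtime_cost(hours, revenue_per_hour, share_of_traffic_affected,
                  extra_support_cost=0.0):
    """Rough direct cost of an outage: lost revenue plus added support cost."""
    lost_revenue = hours * revenue_per_hour * share_of_traffic_affected
    return lost_revenue + extra_support_cost


# Hypothetical scenario: a 3-hour outage, 10,000 in hourly revenue,
# 80% of traffic affected, and 2,500 in additional support costs.
estimate = downtime_cost(3, 10_000, 0.8, extra_support_cost=2_500)
print(f"estimated direct cost: {estimate:,.0f}")
```

Even a rough figure like this gives leaders something to compare against the recurring cost of a second CDN or DNS provider.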

While centralized infrastructure providers like Cloudflare, AWS, and Fastly offer attractive benefits such as scalability, robust security, and simplified management, they inherently represent systemic risk. The Cloudflare outage, as well as similar past incidents at AWS and Fastly, are cautionary examples showing how a single provider’s failure can cascade across industries and geographies, affecting millions of end users.

Business continuity depends not only on choosing high-quality providers but also on diversifying dependencies to avoid single points of failure in critical infrastructure layers. Embracing multi-CDN architectures, multi-DNS setups, and layered monitoring systems are tangible ways businesses mitigate these risks.

Forward-thinking tech leaders understand that infrastructure resilience is no longer optional but a competitive differentiator in the digital economy. Managing systemic risks through technological diversification and operational preparedness reduces downtime, protects revenue streams, and preserves stakeholder confidence in an interconnected, cloud-dependent world.

In Summary

Tech leaders and business owners should care about Cloudflare outages because these incidents expose systemic vulnerabilities that can disrupt critical business operations, damage a company’s reputation, and undermine customer trust.

Strategic investment in resilient infrastructure and risk mitigation is essential for organizations that want to navigate increasingly complex cloud ecosystems safely over the long term.

FAQs

What causes Cloudflare outages?

Cloudflare outages typically stem from internal software bugs, misconfigurations, or automated processes that unintentionally escalate errors within load balancing, DNS routing, or security subsystems. Contrary to a common misconception, these outages are rarely caused by cyberattacks or hardware failures. For example, the November 18, 2025, outage was linked to a misconfiguration and a software race condition in network routing and load balancer health checks.

Other causes seen in 2025 include storage system write failures, dashboard bugs causing self-DDoS effects, and configuration errors affecting public DNS services. Human error and capacity overloads also contribute significantly to outages in complex cloud environments.

How does a Cloudflare outage affect applications like ChatGPT?

Applications using Cloudflare depend on its network for secure and efficient traffic routing. When Cloudflare encounters routing or load balancing failures, these applications become unreachable to users, even if their internal backend systems are fully operational.

During the November 2025 outage, ChatGPT’s backend remained unaffected, but users could not access the service because Cloudflare was unable to route traffic. The result was HTTP 500 errors, along with protective security measures that mistakenly blocked legitimate traffic.

Can multi-CDN strategies prevent Cloudflare-like outages?

While multi-CDN strategies cannot guarantee immunity from all outages, they significantly reduce dependency on a single provider. By distributing traffic across multiple CDN vendors with intelligent routing and automatic failover, businesses can ensure availability even if one CDN experiences disruptions. Multi-CDN setups mitigate the risk of total service unavailability caused by single-provider faults and improve overall performance by choosing the best provider regionally.

What is a single point of failure (SPOF) in internet infrastructure?

A SPOF is a critical system component whose failure causes the entire system to stop functioning. In internet infrastructure, SPOFs commonly arise when services rely exclusively on one CDN, one DNS provider, or a centralized service like Cloudflare. If this component fails, it creates a bottleneck and widespread service outages, as observed during Cloudflare’s outages that impacted multiple major platforms worldwide.

How can businesses prepare for systemic internet service provider outages?

Businesses can prepare by diversifying infrastructure providers, implementing multi-CDN and multi-DNS architectures with automatic failover, and using load balancers equipped with health checks for real-time traffic rerouting.

Designing applications with graceful degradation—such as fallback modes and error transparency—helps maintain user engagement during outages. Comprehensive monitoring through Digital Experience Monitoring (DEM) and synthetic transactions allows early detection and faster incident response, all of which build robust resilience against systemic provider failures.

In Conclusion

Cloudflare outages are a potent reminder of the inherent risks in centralized cloud infrastructure. The November 2025 incident disrupting ChatGPT, X, and Spotify revealed how a fault in Cloudflare’s load balancer monitoring logic could cascade into widespread service interruptions.

This event, together with previous significant AWS and Fastly outages, highlights the need for comprehensive resilience strategies: adopting multi-CDN solutions, diversifying DNS providers, and running robust, continuous monitoring. These measures reduce risk and limit the impact of future service disruptions.

For developers, IT managers, and business leaders alike, the case studies provide clear and actionable guidance: carefully map out all your dependencies, prioritize diversification strategies, and thoroughly prepare for various failure scenarios.

By taking these steps, organizations can improve system reliability while safeguarding their reputation and protecting critical revenue streams.
