When we train machine learning models, we expect them to find patterns. But if the training data is flawed, the AI doesn’t just learn—it amplifies prejudice. AI training data biases happen when datasets are unrepresentative or skewed, leading to outputs that are unfair at best and dangerous at worst.

Whether it’s selection bias in your sampling or measurement bias in your collection, these flaws can derail even the most advanced systems. In this guide, we break down the 9 most common biases you need to recognize to ensure your AI remains accurate, ethical, and compliant.

What Are AI Training Data Biases?

At its core, AI training data bias occurs when the information used to “teach” a machine learning model does not accurately reflect the environment in which that model will operate.

Think of a model like a student: if every textbook they read contains the same factual error, the student will treat that error as an absolute truth. In the AI world, these “errors” are systematic flaws in datasets that cause models to produce discriminatory, skewed, or outright erroneous predictions.

Where do these biases come from?

These biases aren’t usually intentional; rather, they are “baked in” during the development lifecycle:

- Historical Prejudices: Data that reflects past societal inequities (e.g., historical lending data that favored specific demographics).

- Poor Sampling: Collecting data from a group that is too small or too specific to represent the general population.

- Technical Flaws: Errors in how data is measured, labeled, or weighted by human annotators.

Why it Matters in 2026

For tech leaders and practitioners, bias is no longer just an “ethics” problem—it is a performance and legal problem. A biased model leads to:

- Flawed Market Insights: Making expensive business decisions based on spurious trends that exist only in the skewed data.

- Regulatory Penalties: Violating strict transparency laws like the EU AI Act.

- Brand Erosion: Facing public backlash when an automated system exhibits clear prejudice.

Why Bias is a Business Liability in 2026

In today’s market, unchecked biases turn AI from a strategic asset into a significant liability. When your training data is flawed, your ROI doesn’t just stagnate—it actively erodes.

The Cost of Inaccuracy

Research indicates that biased models can raise prediction error rates by up to 30% within specific demographics. For a business, this means miscalculating credit risk, missing out on high-value customer segments, or stocking inventory based on skewed demand signals. These “invisible” errors lead to wasted capital and missed opportunities.

Legal Exposure and Governance

Compliance is no longer a “nice-to-have.” Frameworks like the NIST AI Risk Management Framework (RMF) and the EU AI Act have set the standard for 2026.

- Product Managers now face direct legal exposure if their automated systems are found to be discriminatory in high-stakes areas like hiring, lending, or healthcare.

- Failure to comply can result in massive fines and mandatory “model decommissioning”—essentially deleting your expensive AI and starting over.

The Startup “Redeployment Trap”

For startup founders, ignoring bias early is a technical debt nightmare. Auditing vendor tools and internal datasets before deployment is the only way to avoid costly redeployments. Fixing a biased model after it has been integrated into your product is often 5x more expensive than catching the bias during the training phase.

The Bottom Line: Trust is capital. 72% of S&P 500 companies now disclose AI-related risks to their investors because they know that one visibly biased output can cause permanent brand erosion and loss of customer loyalty.

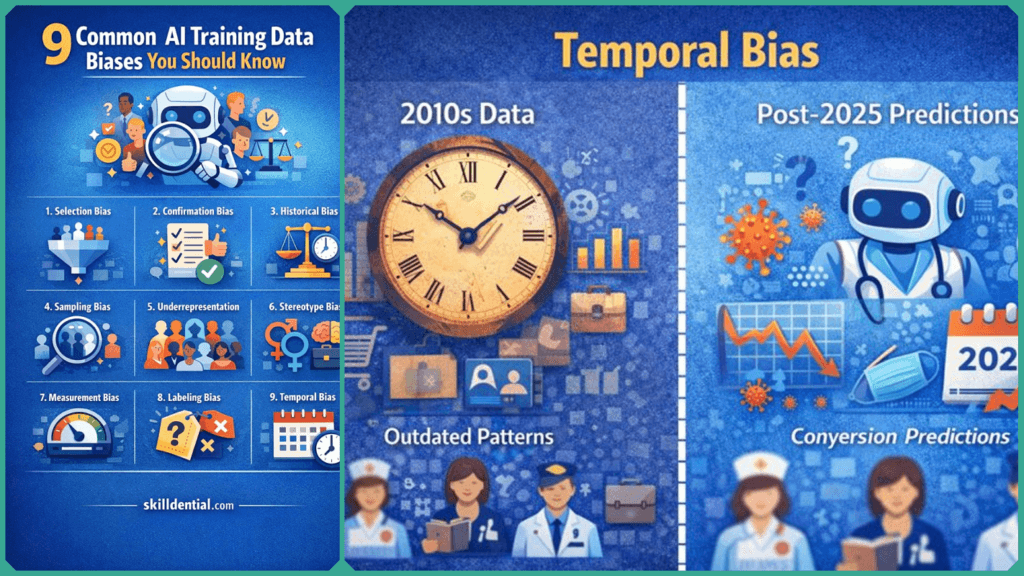

The 9 Common AI Training Data Biases

Use the following breakdown as a checklist for your next data audit. Each bias is paired with its specific business impact and a practical step for mitigation.

| Bias Type | Definition | Example Impact | Mitigation Step |
| --- | --- | --- | --- |
| Selection Bias | Non-representative sampling that excludes specific groups. | Hiring AI overlooks qualified minority candidates. | Use stratified random sampling to ensure balance. |
| Measurement Bias | Faulty sensors, poor lighting, or incorrect labels distort data. | Facial recognition fails on diverse skin tones (up to 35% higher error rates). | Calibrate tools across a wide variety of conditions. |
| Prejudice Bias | Direct reflection of societal stereotypes within the data. | Search results default to male doctors and female nurses. | Hire diverse annotation teams to catch tropes. |
| Exclusion Bias | Systematically omitting key subpopulations during collection. | Financial models ignore low-income users, losing market share. | Conduct rigorous inclusion audits. |
| Sampling Bias | Uneven data collection across different times or locations. | Urban-focused transit models fail in rural infrastructure. | Leverage multi-source datasets for geographic variety. |
| Temporal Bias | Using outdated data that ignores recent societal or economic shifts. | Pre-2020 economic models fail to predict post-pandemic shifts. | Implement regular data refreshes and drift detection. |
| Confirmation Bias | Labelers or developers reinforce their own preconceptions. | Overvaluing majority patterns while ignoring “outlier” successes. | Use blind labeling protocols to hide sensitive variables. |
| Stereotyping Bias | Embedding cultural tropes into the model’s “worldview.” | AI image generators default to gendered career norms. | Run automated bias detection scans on generated outputs. |
| Implicit Bias | Subtle, subconscious prejudices of the developers. | Choosing “convenient” data that mirrors the developer’s background. | Mandatory cross-audit reviews by external teams. |

Why “Measurement Bias” is a 2026 Priority

It’s worth noting that Measurement Bias has become a focal point for regulators this year. In 2026, the focus has shifted from just who is in the data to how the data was captured. For example, health AI models often show higher error rates for Black patients (sometimes by nearly 20%) not just because of sampling, but because pulse oximetry hardware was originally calibrated on lighter skin—a classic measurement bias.

How to use this list:

Don’t try to solve all nine at once. Start by auditing your Selection and Prejudice biases (the latter is where historical inequities in the data show up), as these usually account for the majority of “high-risk” errors in modern AI applications.

A Closer Look: How Selection Bias Sabotages AI

Selection bias doesn’t happen because of “bad” data, but because of incomplete data. It arises when the datasets used to train a model overrepresent certain groups while neglecting others, usually due to non-random or “convenient” collection methods.

When the input is skewed, the model’s “worldview” becomes narrow. It learns to be highly accurate for the majority group but remains functionally “blind” to everyone else.

The Real-World Impact: Facial Recognition

A classic and sobering example is found in facial recognition technology. Historically, many of these tools were trained on datasets that were predominantly composed of lighter skin tones.

- The Consequence: As shown in major studies (such as the Gender Shades project, which audited commercial gender-classification systems), these models had error rates as high as 34.7% for darker-skinned women, compared to less than 1% for lighter-skinned men.

- The Business Risk: For a tech leader, deploying this means your product is effectively “broken” for a significant portion of the global market, leading to immediate accusations of discrimination and potential hardware recalls.

How Practitioners Fix It

Mitigating selection bias requires moving from “passive” collection to active curation:

- Stratified Sampling: Instead of just grabbing the “easiest” data available, practitioners deliberately group data into subgroups (strata) to ensure each demographic is represented proportionally.

- Diverse Sourcing: If your data comes only from one geographic region or one time of day, you must seek out “counter-data” to fill the gaps.

- Inclusion Benchmarks: Setting a hard requirement that no subgroup’s error rate may exceed the majority group’s by more than a fixed margin (e.g., 2 percentage points).
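The stratified sampling step above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production sampler: the `stratified_sample` helper, the `region` field, and the toy records are all hypothetical names chosen for the example.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Draw the same fraction from every stratum so group proportions are preserved."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)

    sample = []
    for members in strata.values():
        # Keep at least one record per stratum so small groups never vanish.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical toy data: 90 "urban" and 10 "rural" records.
records = [{"id": i, "region": "urban"} for i in range(90)]
records += [{"id": i, "region": "rural"} for i in range(90, 100)]

sample = stratified_sample(records, key="region", fraction=0.2)
counts = Counter(r["region"] for r in sample)
print(dict(counts))  # {'urban': 18, 'rural': 2}
```

A plain random draw of 20 records could easily return zero or one rural record; sampling within each stratum keeps the 90/10 split intact.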

Pro-Tip for Leaders: Always ask your data team: “Who is missing from this dataset?” It is often the data you don’t have that causes the most damage.
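One way to turn “Who is missing from this dataset?” into a repeatable check is to compare each group’s share of the dataset against a reference share for the target population. The sketch below assumes you already have such reference shares; the `representation_gaps` helper, the group names, and the numbers are hypothetical.

```python
def representation_gaps(dataset_counts, population_shares):
    """Return {group: dataset share minus target share}; negative means underrepresented."""
    total = sum(dataset_counts.values())
    gaps = {}
    for group, target in population_shares.items():
        observed = dataset_counts.get(group, 0) / total
        gaps[group] = observed - target
    return gaps

# Hypothetical counts in the training set and assumed shares in the target market.
dataset_counts = {"urban": 90, "rural": 10}
population_shares = {"urban": 0.6, "rural": 0.3, "remote": 0.1}

gaps = representation_gaps(dataset_counts, population_shares)
# Groups that appear in the target population but not at all in the data.
missing = [g for g in population_shares if dataset_counts.get(g, 0) == 0]

for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
print("missing entirely:", missing)
```

Groups with a strongly negative gap, or that appear in `missing`, are the first candidates for targeted data collection.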

What Causes Measurement Bias?

Measurement bias occurs when the tools or methods used to capture data are inconsistent, imprecise, or unrepresentative of the environment where the AI will eventually live. It’s a “technical distortion” that happens at the very moment of data creation.

The “Lab vs. Reality” Problem

Think of a model trained on crystal-clear, high-definition images taken in a controlled studio. When that same model is deployed in the “real world”—where cameras might be lower resolution, lighting is poor, or sensors are dusty—it fails. The data used to train the model was “clean,” but it didn’t account for the “noise” of reality.

Critical Case: Medical Imaging

A significant and dangerous example of measurement bias is found in medical AI.

- The Cause: If a diagnostic AI is trained on medical images from one specific type of high-end scanner used in a wealthy hospital, it may fail to recognize the same disease in images taken by different hardware in a rural clinic.

- The Impact: This can lead to misdiagnosis or delayed treatment for patients in underserved areas. In some audits, these technical discrepancies have been shown to account for a massive drop in diagnostic accuracy when moving between hospital systems.

Strategic Mitigation for Leaders

To protect your product from measurement bias, you must enforce “Stress Testing” in your development lifecycle:

- Standardized Collection Protocols: Ensure data is gathered across a wide variety of devices, environments, and conditions (e.g., testing facial recognition in both bright sun and low-light rain).

- Hardware-Agnostic Training: If you are building software, ensure it is trained on data from multiple hardware vendors to prevent “vendor lock-in” bias.

- Audit Results: By implementing standardized protocols and diverse testing, leaders have seen diagnostic and operational errors reduced by up to 25% during independent audits.
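A stress test of this kind usually comes down to reporting error rates separately per device or condition rather than as a single average. The following sketch assumes each test example is tagged with the hardware that captured it; the function names, the `scanner_a`/`scanner_b` labels, and the 2-point margin are illustrative assumptions.

```python
from collections import defaultdict

def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: error_rate}, computed separately for each group."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

def groups_over_margin(rates, reference, max_gap):
    """List groups whose error rate exceeds the reference group's by more than max_gap."""
    base = rates[reference]
    return sorted(g for g, r in rates.items() if r - base > max_gap)

# Hypothetical labels, predictions, and the device that captured each example.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1, 0, 0]
device = ["scanner_a"] * 5 + ["scanner_b"] * 5

rates = error_rate_by_group(y_true, y_pred, device)
flagged = groups_over_margin(rates, reference="scanner_a", max_gap=0.02)
print(rates)    # {'scanner_a': 0.0, 'scanner_b': 0.6}
print(flagged)  # ['scanner_b']
```

The overall error rate here is 30%, which hides the fact that every error comes from one scanner. The same two functions work for demographic subgroups if you pass group labels instead of device labels.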

We’ve covered how data is collected (Selection) and how it is measured (Measurement). But what happens when the data is “perfect,” but the world it reflects is unfair?

Case Study: Insights from Skilldential Career Audits

The following data from Skilldential career audits grounds the abstract concept of Selection Bias in the high-stakes reality of the 2026 job market.

Recent findings from Skilldential career audits highlight just how critical active bias mitigation is for both job seekers and hiring platforms. Their data reveals that bias isn’t just a technical glitch—it has a direct impact on career trajectories and company compliance.

The Problem: The “Resume Gap”

Skilldential observed that many aspiring practitioners struggle to identify subtle selection biases in their own datasets and applications.

- The Impact: In the audits, this blind spot was associated with a 40% rate of missed interview opportunities.

- The Cause: When AI screening tools are trained on non-diverse historical data, they often “filter out” qualified candidates who don’t fit a narrow, biased profile (e.g., specific zip codes, non-traditional education paths, or naming conventions).

The Solution: Diverse Dataset Audits

By implementing rigorous, diverse dataset audits, Skilldential saw a dramatic shift in model performance.

- Fairness Improvement: Fairness scores for recruitment models improved by 35%.

- Strategic Outcome: Ensuring that the training data accurately reflected a global, diverse talent pool didn’t just make the AI “nicer”—it made it significantly more accurate at identifying top-tier talent.

The Business Result: Risk Reduction

For tech leaders, the most compelling takeaway from the Skilldential report is the impact on governance.

- Halved Compliance Risk: Leaders who underwent specialized AI bias training reported that their compliance risks were cut in half.

- 2026 Context: In an era of the EU AI Act and strict NIST guidelines, halving your risk through proactive auditing isn’t just good ethics—it’s essential for protecting your bottom line.

How to Spot and Fix These Biases

Recognizing that your data is biased is only the first step. In 2026, “knowing” is insufficient—regulators and customers expect you to have a proactive strategy for detection and repair.

To transform your AI from a liability into an asset, you need a workflow that combines technical metrics with human oversight.

Spotting the Invisible: Fairness Metrics

You cannot fix what you cannot measure. Modern practitioners use specific Fairness Metrics to audit their models. One of the most critical is Demographic Parity.

- How it works: This metric checks if your model delivers a “positive outcome” (like a job offer or a loan approval) at the same rate across different sensitive groups (race, gender, etc.), regardless of the individual’s qualifications.

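- The Tooling: Frameworks like the NIST AI Risk Management Framework encourage evaluating results separately for each group (“disaggregated evaluations”), which is how these disparities are surfaced. A common screening heuristic is the “four-fifths rule” from U.S. employment-selection guidelines: if the selection rate for one group is less than 80% of the rate for the group with the highest rate, you have a strong signal of possible adverse impact that warrants investigation.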
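The demographic parity check and the four-fifths rule described above reduce to a small calculation. Here is a minimal sketch in standard-library Python; the function names, group labels, and decisions are hypothetical, and a real audit would use far larger samples and a significance test.

```python
def selection_rates(outcomes, groups):
    """Share of positive outcomes (1 = selected, 0 = rejected) per group."""
    totals, positives = {}, {}
    for outcome, group in zip(outcomes, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means perfect parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest

# Hypothetical screening decisions for two groups of 10 applicants each.
outcomes = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
groups = ["group_a"] * 10 + ["group_b"] * 10

rates = selection_rates(outcomes, groups)
ratio = disparate_impact_ratio(rates)
print(rates)               # {'group_a': 0.6, 'group_b': 0.3}
print(round(ratio, 2))     # 0.5
print(ratio < 0.8)         # True -> fails the four-fifths screen
```

A ratio of 0.5 means group_b is selected at half the rate of group_a, well below the 0.8 threshold.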

Fixing the Flaws: Data & Algorithmic Mitigation

Once a bias is spotted, you have three primary “levers” to pull:

- Data Augmentation: If your model fails on a specific group because it lacks data (Selection Bias), you can use Synthetic Data Generation or GANs (Generative Adversarial Networks) to create realistic, diverse training samples. This “fills the gaps” without requiring months of new data collection.

- Debiasing Algorithms: You can implement In-processing techniques, such as adding a “fairness penalty” to your model’s loss function. This essentially tells the AI: “I want you to be accurate, but I will penalize you if you achieve that accuracy by being unfair.”

- Iterative Testing: Bias mitigation is not a “one-and-done” task. Models must be tested iteratively against “hold-out” datasets that specifically represent the demographics most at risk of bias.
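To make the “fairness penalty” idea concrete, the sketch below adds a squared demographic-parity gap to an ordinary binary cross-entropy loss. It only evaluates the loss for a fixed set of predictions and is one of many possible penalty forms; the names `fair_loss`, `parity_gap`, and the weight `lam` are assumptions made for this example, and in practice you would minimize this loss inside a training framework.

```python
import math

def bce(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy between labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)

def parity_gap(p_pred, groups, group_a, group_b):
    """Difference in mean predicted score between two groups."""
    def mean_for(g):
        scores = [p for p, grp in zip(p_pred, groups) if grp == g]
        return sum(scores) / len(scores)
    return mean_for(group_a) - mean_for(group_b)

def fair_loss(y_true, p_pred, groups, group_a, group_b, lam=1.0):
    """Accuracy term plus lam times the squared demographic-parity gap."""
    gap = parity_gap(p_pred, groups, group_a, group_b)
    return bce(y_true, p_pred) + lam * gap ** 2

# Hypothetical labels, scores, and group membership.
y_true = [1, 0, 1, 0]
p_pred = [0.9, 0.8, 0.3, 0.2]
groups = ["a", "a", "b", "b"]

plain = fair_loss(y_true, p_pred, groups, "a", "b", lam=0.0)
penalized = fair_loss(y_true, p_pred, groups, "a", "b", lam=1.0)
print(f"plain={plain:.3f} penalized={penalized:.3f}")
```

Group "a" receives a mean score of 0.85 and group "b" 0.25, so the penalty adds 0.6 squared, or 0.36, to the loss. Raising `lam` pushes an optimizer harder toward equal average scores, usually at some cost in raw accuracy.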

The Ethical Advocate’s Role: Transparency Reports

For those focused on the societal impact, the goal is Transparency Reporting.

- The Purpose: These reports (now often mandatory under the EU AI Act) demystify the “black box.” They document where your data came from, which biases were identified, and exactly what steps were taken to mitigate them.

- The Business Benefit: Transparency builds radical trust. According to 2025/2026 CX trends, 75% of businesses believe that a lack of transparency leads to increased customer churn. By publishing a transparency report, you aren’t admitting weakness—you are demonstrating maturity and ethical leadership.

Common AI Training Data Biases FAQs

To wrap up our guide, here are the most frequently asked questions regarding AI training data biases and how they are handled in 2026.

What is selection bias in AI training data?

Selection bias occurs when the dataset used for training does not accurately represent the target population due to flawed or non-random sampling.

- The Risk: The model becomes highly accurate for the “majority” group in the data, but fails significantly for underrepresented groups.

- 2026 Example: A skincare analysis AI trained exclusively on images from high-end clinics may fail to recognize conditions in patients from rural or lower-income areas because the training data lacked diverse environmental and demographic representation.

How does prejudice bias affect AI models?

Prejudice bias (or Association Bias) embeds societal stereotypes and historical prejudices directly into an AI’s predictions.

- The Impact: The AI learns to reinforce “traditional” norms rather than objective reality. For instance, an image generator might consistently depict “CEOs” as men and “Assistants” as women because that is the pattern it saw most often in its historical training data.

- Mitigation: This requires diverse annotation teams to audit labels and the use of “counter-stereotypical” data to rebalance the model’s understanding.

What causes temporal bias?

Temporal bias arises when a model is trained on data that was accurate at one point in time but has since become outdated due to societal, economic, or technological shifts.

- The Problem: Models trained on pre-pandemic consumer data (pre-2020) or pre-2026 market trends will fail to predict current behaviors.

- The Fix: Implement “Model Drift” monitoring and schedule regular data refreshes to ensure the AI’s “knowledge” evolves with the world.
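Drift monitoring can start with a simple distribution comparison between the training data and recent production data. The sketch below uses the Population Stability Index (PSI), one common choice among several; the bin edges and toy values are hypothetical, and the 0.25 alert level is a widely used rule of thumb rather than a formal standard.

```python
import math

def psi(expected, actual, bin_edges, eps=1e-6):
    """Population Stability Index between a reference sample and a recent sample."""
    def shares(values):
        counts = [0] * (len(bin_edges) - 1)
        for v in values:
            for i in range(len(counts)):
                is_last = i == len(counts) - 1
                # Bins are [low, high), except the last bin, which also includes its upper edge.
                if bin_edges[i] <= v < bin_edges[i + 1] or (is_last and v == bin_edges[-1]):
                    counts[i] += 1
                    break
            # Values outside the edges are ignored in this sketch.
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training-time data vs. recent production data.
edges = [0, 50, 100]
reference = [25] * 50 + [75] * 50   # 50% low, 50% high
shifted = [25] * 20 + [75] * 80     # 20% low, 80% high

print(psi(reference, reference, edges))         # 0.0
print(round(psi(reference, shifted, edges), 3)) # 0.416
```

A score of 0.416 is well above the 0.25 rule-of-thumb level, which would be a reasonable trigger for a data refresh or retraining review.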

Can measurement bias be fixed?

Yes. Measurement bias is often a technical issue that can be solved through standardization.

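- The Solution: By ensuring that data is collected using consistent protocols and properly calibrated hardware, and that the hardware used in training matches the range of devices used in deployment, you remove the “noise” that distorts a model’s vision.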

- Business Value: Leaders who implement standardized sensor calibration and diverse environment testing (e.g., testing at different light levels or temperatures) have seen diagnostic and operational errors drop by up to 25%.

Why is implicit bias hard to detect?

Implicit bias is difficult to spot because it is “invisible”—it stems from the subconscious prejudices of the humans who collect, label, and curate the data.

- The Challenge: Unlike statistical errors, implicit bias hides in subtle patterns, such as a labeler subtly rating resumes from certain universities higher without realizing it.

- Detection Strategy: 2026 standards suggest using Statistical Audits (like Demographic Parity checks) and Cross-Audit Reviews, where external teams verify the fairness of the labeling process.

In Conclusion: Turning Awareness into Action

Understanding the 9 common AI training data biases is no longer just a technical exercise—it is a cornerstone of modern business strategy. Whether it is Selection Bias skewing your customer insights or Measurement Bias distorting your operational efficiency, these flaws directly impact your bottom line and your brand’s integrity.

Key Takeaways

- The Root Cause: Biases aren’t just “bugs”; they are systematic distortions—statistical, systemic, or human—that stem from how we collect and interpret data.

- The Framework: Leveraging global standards like the NIST AI Risk Management Framework is the most effective way to categorize and address these risks.

- The Business Impact: Proactive bias mitigation reduces compliance risk, prevents costly model redeployments, and ensures your AI delivers equitable performance across all demographics.

Your Next Step: Audit Before You Deploy

Don’t wait for a public failure or a regulatory audit to reveal the flaws in your system. Start building a culture of transparency and accuracy today.

Download the AI Audit Checklist: Use this step-by-step guide to identify “danger zones” in your current tools, interview third-party AI vendors, and ensure your training data meets 2026 fairness standards.

Mitigate your risks today to build a more accurate, fair, and profitable future.

- 9 Common AI Training Data Biases You Should Know - January 29, 2026