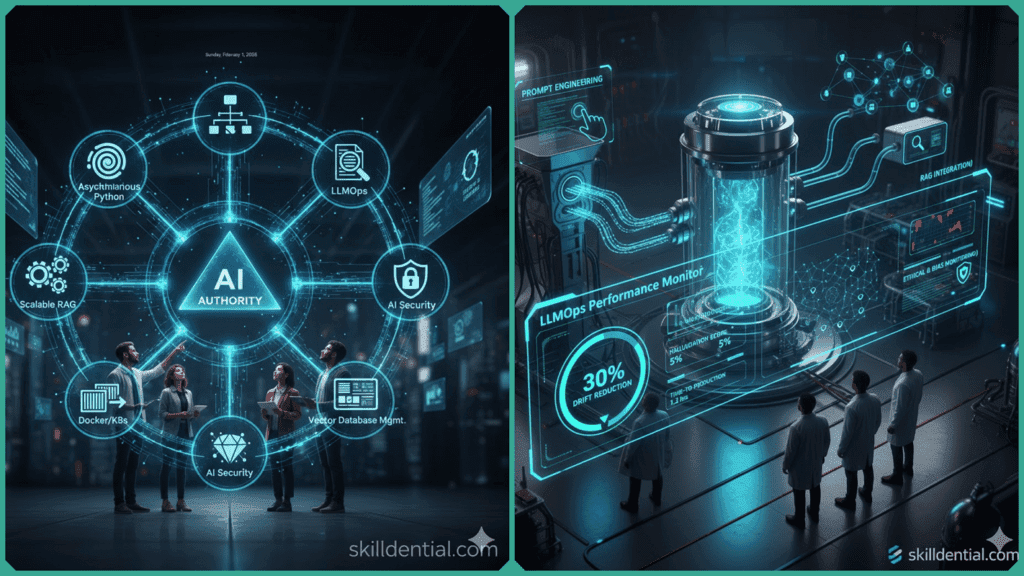

Software skills encompass the programming, system design, and deployment practices essential for building robust AI systems that live beyond the experimental notebook. True authority in this field requires more than just model training; it demands proficiency in asynchronous processing, frameworks for scalable pipelines, and the operations tools that ensure production reliability.

Mastering these disciplines elevates a developer from simply implementing features to architecting the secure, efficient AI infrastructures required for real-world scale.

“To bridge the gap between building a demo and leading a department, here are the top 9 software skills that transform raw AI talent into architectural authority.”

Why Focus on These Software Skills?

These skills bridge the gap from prototyping AI models to deploying enterprise-grade systems that scale and secure probabilistic computations. Rising software engineers and transitioning data scientists gain authority by orchestrating “Software 2.0”—a paradigm where code manages data flows and model behaviors rather than deterministic logic.

In Skilldential career audits, we observed that transitioning data scientists often struggle with production deployment, and that those who adopted LLMOps and robust software engineering practices reached production 40% faster.

Skill 1: Asynchronous Python for High-Throughput AI

Asynchronous Python uses libraries like asyncio and aiohttp to handle concurrent I/O operations, enabling AI pipelines to process streams without blocking. This software skill powers real-time inference servers managing thousands of requests per second. It grants authority by preventing bottlenecks in high-load environments and significantly reducing compute costs through efficient resource utilization.
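As a minimal sketch of the idea, the snippet below fans out many I/O-bound requests concurrently with asyncio and caps concurrency with a semaphore. The `fake_inference` coroutine is a hypothetical stand-in for a real network call (for example an aiohttp request to an inference server), and the delay and concurrency limit are illustrative assumptions.

```python
import asyncio
import time


async def fake_inference(request_id: int, delay: float = 0.05) -> dict:
    """Hypothetical stand-in for an I/O-bound model call."""
    await asyncio.sleep(delay)  # yields control so other requests can progress
    return {"id": request_id, "output": f"result-{request_id}"}


async def handle_batch(request_ids: list[int], max_concurrency: int = 100) -> list[dict]:
    """Run many requests concurrently, capped so the backend is not flooded."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(request_id: int) -> dict:
        async with semaphore:
            return await fake_inference(request_id)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(guarded(i) for i in request_ids))


def run_demo(n: int = 200) -> tuple[list[dict], float]:
    """Process n simulated requests and report wall-clock time in seconds."""
    start = time.perf_counter()
    results = asyncio.run(handle_batch(list(range(n))))
    return results, time.perf_counter() - start
```

Because each simulated call spends its time waiting rather than computing, the batch finishes in roughly the time of a few calls instead of the sum of all of them. CPU-bound work would not benefit in the same way.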

Skill 2: LLMOps for Continuous Model Optimization

LLMOps extends MLOps to large language models, focusing on prompt versioning, fine-tuning, and RAG integration. Unlike traditional MLOps, it emphasizes embedding management and ethical monitoring to adapt to evolving data. Professionals wielding this software skill lead teams by ensuring models remain performant post-deployment, reducing hallucination risks by up to 30% through rigorous evaluation loops.
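One way to picture prompt versioning plus an evaluation loop is the sketch below. It assumes a hypothetical in-memory `PromptRegistry` and a `model` callable that you supply; real teams would typically back this with a tracking tool, and the substring check is a deliberately crude scoring rule.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptRegistry:
    """Hypothetical registry: stores templates under content-derived version IDs."""
    versions: dict[str, str] = field(default_factory=dict)

    def register(self, template: str) -> str:
        # Identical templates always map to the same version ID
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        self.versions[version_id] = template
        return version_id


def evaluate(
    version_id: str,
    registry: PromptRegistry,
    model: Callable[[str], str],
    cases: list[tuple[str, str]],
) -> float:
    """Return the fraction of cases whose expected text appears in the answer."""
    template = registry.versions[version_id]
    passed = 0
    for question, expected in cases:
        answer = model(template.format(question=question))
        passed += expected.lower() in answer.lower()
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    """Placeholder model used only for this illustration."""
    return "Paris" if "France" in prompt else "I do not know"
```

Running `evaluate` against every new prompt version before release gives a simple regression signal: a score that drops between versions is a reason to hold the change.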

Skill 3: Scalable RAG Pipeline Engineering

Scalable RAG (Retrieval-Augmented Generation) is more than just a vector search; it involves chunking strategies, embedding optimization, and query routing for accuracy at scale. An authority in this space uses parallel processing to handle millions of documents without latency spikes. This software skill positions you as the architect who delivers context-aware AI that outperforms basic prompting.
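To make chunking and retrieval concrete, here is a small standard-library sketch. It assumes word-window chunks with overlap and uses a bag-of-words vector as a toy substitute for a real embedding model, so the ranking quality is illustrative only.

```python
import math
from collections import Counter


def chunk_text(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would call a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

The overlap keeps sentences that straddle a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.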

Skill 4: AI Security and Secure Model Deployment

AI security involves protecting against prompt injection, data poisoning, and adversarial attacks. Following NIST guidance such as the Cyber AI Profile (NIST 8596), authorities implement secure training pipelines and continuous scanning. Mastering this software skill creates a professional “moat,” as you enforce the compliance standards that protect company IP and avoid breaches that can cost millions.
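As a rough illustration of input screening, the sketch below flags a few well-known injection phrasings before user text is placed into a prompt. The pattern list and the `<user_input>` delimiters are assumptions made for this example; pattern matching alone is easy to evade and would only be one layer next to dedicated scanners and output checks.

```python
import re

# Illustrative patterns only; real attacks vary far more than this list covers
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your |the )?(system|hidden) prompt", re.I),
    re.compile(r"you are now (in )?developer mode", re.I),
]


def screen_input(user_text: str) -> list[str]:
    """Return the patterns that matched, or an empty list if none did."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(user_text)]


def build_prompt(system_rules: str, user_text: str) -> str:
    """Refuse flagged input; otherwise fence user text off from the system rules."""
    hits = screen_input(user_text)
    if hits:
        raise ValueError(f"input rejected, matched {len(hits)} suspicious pattern(s)")
    return f"{system_rules}\n\n<user_input>\n{user_text}\n</user_input>"
```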

Skill 5: Agentic Workflow Orchestration

Agentic workflows build multi-agent systems that reason, plan, and execute tasks autonomously using frameworks like LangGraph or CrewAI. This software skill allows you to automate complex DevOps pipelines where agents collaborate via feedback loops. Authority comes from the ability to design systems that “self-correct,” freeing human teams for high-level strategic decisions.
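The "self-correct" feedback loop can be sketched in plain Python without any framework. This example does not use the LangGraph or CrewAI APIs; `worker` and `critic` are hypothetical callables standing in for two agents, and the stubs exist only to show the control flow.

```python
from typing import Callable


def run_with_self_correction(
    task: str,
    worker: Callable[[str, str], str],
    critic: Callable[[str], tuple[bool, str]],
    max_attempts: int = 3,
) -> tuple[str, list[tuple[int, str, bool]]]:
    """Let a worker retry a task until a critic accepts it or attempts run out."""
    feedback = ""
    history = []
    for attempt in range(1, max_attempts + 1):
        draft = worker(task, feedback)
        accepted, feedback = critic(draft)
        history.append((attempt, draft, accepted))
        if accepted:
            return draft, history
    raise RuntimeError(f"no accepted result after {max_attempts} attempts")


def stub_worker(task: str, feedback: str) -> str:
    """Placeholder agent: revises its output when the critic asks for a rollback plan."""
    if "rollback" in feedback:
        return f"{task} with rollback plan"
    return task


def stub_critic(draft: str) -> tuple[bool, str]:
    """Placeholder reviewer: accepts only drafts that mention a rollback."""
    if "rollback" in draft:
        return True, ""
    return False, "add a rollback plan"
```

The attempt cap matters in practice: without it, two agents that never agree would loop indefinitely and keep consuming tokens.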

Skill 6: Vector Database Management and Optimization

Vector databases like Pinecone, Weaviate, or Milvus are the “long-term memory” of AI. Expertise in this software skill includes sharding, replication, and index tuning (e.g., HNSW vs. IVF). Optimization ensures high availability and query speed under heavy loads, allowing you to influence the foundational data architecture of major AI projects.
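To show what sharding means for a query, the sketch below spreads vectors across shards by hashing the document ID, then runs a scatter-gather search that merges each shard's top results. `ShardedVectorStore` is a hypothetical class, and each shard is scanned by brute force here; in a real database an index such as HNSW or IVF would replace that scan.

```python
import heapq
import math
import zlib


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ShardedVectorStore:
    """Hypothetical in-memory store that hashes document IDs onto shards."""

    def __init__(self, num_shards: int = 4) -> None:
        self.shards: list[dict[str, list[float]]] = [{} for _ in range(num_shards)]

    def _shard_for(self, doc_id: str) -> int:
        # crc32 is stable across runs, unlike Python's built-in hash() for strings
        return zlib.crc32(doc_id.encode("utf-8")) % len(self.shards)

    def upsert(self, doc_id: str, vector: list[float]) -> None:
        self.shards[self._shard_for(doc_id)][doc_id] = vector

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        partials = []
        for shard in self.shards:  # scatter: each shard returns its own top k
            scored = ((cosine(query, vec), doc_id) for doc_id, vec in shard.items())
            partials.extend(heapq.nlargest(k, scored))
        # gather: merge the per-shard candidates into a global top k
        return [(doc_id, score) for score, doc_id in heapq.nlargest(k, partials)]
```

Each shard only needs to return its own top k, because the global top k must be contained in the union of those per-shard lists.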

Skill 7: Containerization and Orchestration (Docker/K8s)

Docker containerizes AI models for portability, while Kubernetes orchestrates them across clusters. This software skill enables blue-green deployments and auto-scaling for inference endpoints. In team settings, it ensures zero-downtime updates, positioning you as the technical reliability lead who can handle millions of concurrent users.
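The logic behind a blue-green deployment can be modeled in a few lines. This is a conceptual sketch, not Kubernetes configuration: `Environment` and `BlueGreenRouter` are hypothetical names, and the health check is whatever readiness probe your platform provides.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Environment:
    name: str
    model_version: str
    health_check: Callable[[], bool]


class BlueGreenRouter:
    """Sends all traffic to the live environment; the idle one holds the new release."""

    def __init__(self, live: Environment, idle: Environment) -> None:
        self.live = live
        self.idle = idle

    def route(self, request: str) -> str:
        return f"{self.live.name}:{self.live.model_version} handled {request}"

    def promote(self) -> bool:
        """Swap traffic to the idle environment only if it passes its health check."""
        if not self.idle.health_check():
            return False  # keep serving from the current live environment
        self.live, self.idle = self.idle, self.live
        return True
```

Because the previous environment stays intact after the swap, rolling back is simply another promotion in the opposite direction.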

Skill 8: CI/CD Pipelines for AI Workflows

AI-specific CI/CD integrates model testing and automated deployment. Using tools like GitHub Actions, authorities build pipelines that validate embeddings and security scans before code ever reaches production. This software skill drives massive efficiency, often cutting deployment cycles from weeks to hours.
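A validation gate of the kind a pipeline step might run could look like the sketch below. The specific checks (dimension, NaN or infinite values, all-zero vectors) and the function names are assumptions for illustration; a CI job would call `sys.exit(ci_gate(...))` so that a non-zero code fails the build.

```python
import math


def validate_embeddings(vectors: list[list[float]], expected_dim: int) -> list[str]:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    errors = []
    for i, vec in enumerate(vectors):
        if len(vec) != expected_dim:
            errors.append(f"vector {i}: expected dim {expected_dim}, got {len(vec)}")
        elif any(math.isnan(x) or math.isinf(x) for x in vec):
            errors.append(f"vector {i}: contains NaN or infinite values")
        elif all(x == 0.0 for x in vec):
            errors.append(f"vector {i}: all zeros")
    return errors


def ci_gate(vectors: list[list[float]], expected_dim: int) -> int:
    """Print failures and return a process exit code (0 = pass, 1 = fail)."""
    errors = validate_embeddings(vectors, expected_dim)
    for error in errors:
        print("FAIL", error)
    return 1 if errors else 0
```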

Skill 9: Monitoring and Observability for AI Systems

Observability tracks metrics like latency, drift, and output quality using tools like Prometheus or Arize. Proactive alerting detects issues in agent behaviors before they affect users. Mastering this software skill allows you to maintain 99.9% uptime, providing the data-driven justification for major infrastructure investments.
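Two small calculations sit behind most latency alerts and uptime targets, sketched here with the standard library. The nearest-rank percentile and the threshold value are illustrative choices, not what Prometheus or Arize compute internally.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def should_alert(latencies_ms: list[float], p95_threshold_ms: float) -> bool:
    """Fire when the 95th percentile latency exceeds the threshold."""
    return percentile(latencies_ms, 95) > p95_threshold_ms


def downtime_budget_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Minutes of allowed downtime in the period for a given availability target."""
    return period_days * 24 * 60 * (1 - availability_pct / 100)
```

For a 99.9% target over 30 days, `downtime_budget_minutes(99.9)` works out to about 43.2 minutes, which is the entire margin for incidents and maintenance in that month.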

Software Skills Comparison Matrix

This comparison matrix serves as a quick-reference guide for the “Software Skills” that convert general AI knowledge into professional engineering authority.

| Software Skill | Focus Area | Authority Outcome | Key Tools (2026 Standards) |
| --- | --- | --- | --- |
| Async Python | Concurrency | Handles 10k+ requests/sec with minimal overhead. | asyncio, aiohttp, FastAPI |
| LLMOps | Model Lifecycle | Reduces model drift by 30% through continuous eval. | LangSmith, Weights & Biases, MLflow |
| Scalable RAG | Retrieval | Scales to millions of docs without latency spikes. | Pinecone, Weaviate, GraphRAG |
| AI Security | Protection | Prevents prompt injection and secures company IP. | NIST 8596, Lakera, Giskard |
| Agentic Workflows | Autonomy | Automates complex DevOps and reasoning tasks. | LangGraph, CrewAI, AutoGPT |
| Vector DB Mgmt | Storage | Ensures sub-millisecond semantic search at scale. | Milvus, ChromaDB, Redis VL |
| Docker/K8s | Deployment | Achieves zero-downtime scaling for inference. | Kubernetes, Docker, KServe |
| CI/CD for AI | Automation | Cuts deployment cycles from weeks to hours. | GitHub Actions, CircleCI, ArgoCD |
| Monitoring | Reliability | Maintains 99.9% uptime for probabilistic systems. | Prometheus, Grafana, Arize Phoenix |

Key 2026 Update: The NIST Authority

A major addition for 2026 is Skill 4: AI Security. Following the recently finalized NIST 8596 (Cyber AI Profile), an “AI Authority” is no longer just someone who can build a model, but someone who can secure it. Authorities are now expected to:

- Inventory AI assets: Mapping every API, model version, and data flow.

- Implement “Control Overlays”: Using specific software safeguards to mitigate “creative” LLM failures.

- Thwart AI-enabled threats: Building resilience against deepfake-based social engineering and automated malware.

The transition from AI Talent to AI Authority is a move from experimental to operational. While talent builds the engine, authority builds the entire vehicle—ensuring it is fast (Async), fueled (RAG), steered (Agents), and armored (Security).

By mastering these 9 software skills, you move beyond the “prompting” layer and into the architectural tier where the most impactful (and high-paying) AI decisions are made.

How do these software skills create a career moat?

In 2026, basic AI literacy has become “table stakes”—nearly everyone can write a prompt or generate a script. To build a true career moat, you must move beyond the model and master the software skill set that surrounds it. These skills differentiate you by enabling the transition from experimental “AI Talent” to a professional “AI Authority” who can deliver ROI in production environments.

Avoiding the “Experimental Trap”

Most AI projects never leave the notebook stage. Technical leads in 2026 prioritize hiring professionals who can solve the “last mile” problem: production reliability. By mastering software skills like LLMOps and CI/CD for AI, you ensure that a model isn’t just a demo but a stable, revenue-generating service. Organizations now face an infrastructure crisis where over 50% of AI projects are delayed or canceled due to complexity; being the person who can simplify and stabilize that infrastructure makes you indispensable.

Protecting the Bottom Line

In the current economy, compute is the new oil. An AI Authority uses software skills such as Asynchronous Python and Usage-Based Inference Scaling to optimize resource consumption.

- The ROI Factor: Enterprises report that automated pipelines and optimized inference can save up to 50% on operational costs.

- The “Cost of Failure”: A single production failure or a security breach in a high-load system can cost an organization $50k+ in wasted compute and potential millions in IP loss. Mastering AI Security creates a moat because you aren’t just building features—you are protecting assets.

Moving from “Coder” to “Architect”

The 2026 job market has seen a “squeeze” on traditional junior roles. AI now handles routine bug fixes and basic documentation. Your software skills in Agentic Workflow Orchestration and Systems Thinking allow you to operate at a higher level. You aren’t just writing code; you are architecting “Software 2.0” systems that govern how multiple AI agents interact, reason, and self-correct.

The Authority Moat: While “AI Talent” is common, “AI Infrastructure Mastery” is rare. According to recent career audits, professionals with these integrated engineering skills command a 56% wage premium over those who only possess general AI knowledge.

What is the difference between MLOps and LLMOps?

While both disciplines share the goal of operationalizing models, the core difference lies in the starting point and the feedback loop. MLOps is designed for a world where you build the model from scratch; LLMOps is designed for a world where you specialize a pre-existing “giant.”

MLOps vs. LLMOps: Key Technical Differences

| Feature | MLOps (Traditional ML) | LLMOps (Generative AI) |
| --- | --- | --- |
| Primary Task | Training models from scratch on structured data. | Fine-tuning and orchestrating pre-trained foundation models. |
| Data Type | Tabular, structured datasets (CSV, SQL). | Unstructured text, code, and multimodal embeddings. |
| Feedback Loop | Model Retraining: Changing weights based on new data. | RAG & Prompting: Updating context and retrieval pipelines. |
| The “Unit” of Work | Feature Engineering. | Prompt Engineering and Vector Embeddings. |
| Latency Focus | Inference speed on static data. | Token throughput and “Time to First Token.” |

Why the Distinction Matters for AI Authority

- From Training to Tuning: In MLOps, the software skill focus is on the training pipeline—collecting raw data, cleaning it, and running compute-intensive training jobs. In LLMOps, the focus shifts to the retrieval pipeline. An AI Authority knows that you rarely need to retrain the base model; instead, you build a robust RAG (Retrieval-Augmented Generation) architecture to feed the model the right information at the right time.

- The Evaluation Challenge: Traditional ML has clear metrics like Accuracy, Precision, or Mean Squared Error. LLMOps introduces “probabilistic uncertainty.” How do you measure if a summary is “good”? The LLMOps software skill here is building automated “LLM-as-a-Judge” pipelines that use one model to grade the outputs of another, ensuring quality at scale.

- State Management: MLOps models are often stateless (input in, prediction out). LLMOps requires managing Conversation State and Memory. This requires a deeper software skill set in session management and vector database sharding to ensure the AI “remembers” the user across a long, complex workflow.
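The “LLM-as-a-Judge” pattern mentioned in the list above can be sketched as follows. The `judge` argument is a hypothetical callable wrapping whichever grading model you use, and the rubric text, score range, and pass threshold are assumptions chosen for the example.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = (
    "Rate the summary from 1 to 5 for faithfulness to the source.\n"
    "Source: {source}\n"
    "Summary: {summary}\n"
    "Reply with the number only."
)


def parse_score(reply: str) -> Optional[int]:
    """Extract a standalone digit from 1 to 5; return None if the judge went off-script."""
    match = re.search(r"\b[1-5]\b", reply)
    return int(match.group()) if match else None


def judge_batch(
    pairs: list[tuple[str, str]],
    judge: Callable[[str], str],
    min_score: int = 4,
) -> list[dict]:
    """Grade each (source, summary) pair and mark whether it meets the threshold."""
    results = []
    for source, summary in pairs:
        reply = judge(JUDGE_TEMPLATE.format(source=source, summary=summary))
        score = parse_score(reply)
        passed = score is not None and score >= min_score
        results.append({"summary": summary, "score": score, "passed": passed})
    return results


def stub_judge(prompt: str) -> str:
    """Placeholder grader used only to exercise the pipeline."""
    summary = prompt.split("Summary:")[1]
    return "5" if "Paris" in summary else "Score: 2"
```

Treating an unparseable reply as a failure rather than guessing a score keeps a misbehaving judge from silently passing bad outputs.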

The Authority Insight: MLOps is about stability (the model stays the same until the next version). LLMOps is about agility (the model stays the same, but the prompts, data chunks, and system instructions evolve daily).

What are software skills in an AI context?

In the AI landscape of 2026, software skills encompass the engineering disciplines required to transition a model from a local notebook to a global production environment. This includes asynchronous programming for high-throughput APIs, containerization for portable deployments, and the orchestration of complex data pipelines. These skills ensure that AI systems are not just “smart,” but robust, scalable, and reliable.

Why prioritize LLMOps over basic Python?

While Python is the foundation, LLMOps is the professional superstructure. It manages challenges unique to Large Language Models—such as prompt versioning, cost-per-token optimization, and hallucination monitoring. Prioritizing LLMOps qualifies you for enterprise-grade roles where the goal is to maintain high-performance, context-aware systems at scale rather than just writing individual scripts.

How does RAG improve AI authority?

Retrieval-Augmented Generation (RAG) is a critical software skill because it solves the “knowledge cutoff” and hallucination problems. By engineering pipelines that retrieve fresh, external data (from vector databases or real-time APIs) before generating an answer, you create a system that is grounded in fact. This positions you as an authority who builds trustworthy, data-driven applications that outperform static, basic prompting.

What AI security practices are essential?

Essential practices align with the NIST 8596 (Cyber AI Profile) and NIST AI 600-1 standards. These include:

- Adversarial Robustness Testing: Scanning for prompt injection and model tampering.

- Data Provenance: Ensuring the training and retrieval data are secure and unpoisoned.

- Least-Privilege Access: Restricting AI agents’ permissions to only the systems they absolutely need, preventing autonomous breaches.
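Least-privilege access for agents can be enforced at the point where tools are called. The sketch below assumes a hypothetical `ScopedToolbox` wrapper and two placeholder tools; a production system would pair this with credentials scoped the same way on the underlying systems.

```python
from typing import Any, Callable


class ToolPermissionError(PermissionError):
    """Raised when an agent calls a tool outside its allowlist."""


class ScopedToolbox:
    """Hypothetical wrapper that exposes only explicitly allowed tools to an agent."""

    def __init__(self, tools: dict[str, Callable[..., Any]], allowed: set[str]) -> None:
        unknown = allowed - set(tools)
        if unknown:
            raise ValueError(f"allowlist names unknown tools: {sorted(unknown)}")
        self._tools = tools
        self._allowed = frozenset(allowed)

    def call(self, name: str, *args: Any, **kwargs: Any) -> Any:
        if name not in self._allowed:
            raise ToolPermissionError(f"agent may not call {name!r}")
        return self._tools[name](*args, **kwargs)


def read_file(path: str) -> str:
    """Placeholder tool for illustration."""
    return f"contents of {path}"


def delete_file(path: str) -> str:
    """Placeholder tool for illustration."""
    return f"deleted {path}"
```

Denying by default means a newly registered tool is unavailable to every agent until someone deliberately adds it to an allowlist.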

When to use agentic workflows?

Agentic workflows should be used when a task requires multi-step reasoning, planning, and autonomous execution across different tools. They excel in complex, non-linear challenges like automated DevOps, research synthesis, or self-correcting data pipelines. An AI Authority uses frameworks like LangGraph or CrewAI to orchestrate these agents, ensuring they work collaboratively rather than in isolated loops.

In Conclusion

The transition from AI Talent to AI Authority is defined by the shift from probabilistic experimentation to deterministic engineering.

Asynchronous code ensures your systems handle real-world scale without collapse; LLMOps guarantees that your models remain reliable long after the initial deployment; and adherence to the latest NIST 8596 (Cyber AI Profile) standards builds the institutional trust necessary for enterprise-wide adoption.

In the 2026 landscape, authority isn’t just about what you can build—it’s about what you can stabilize, scale, and secure.

Your “First Step” Audit

To begin your journey toward AI Authority, start with a high-impact, low-risk audit of a single existing pipeline:

- Containerize with Docker: Wrap your current AI service in a Docker container to ensure environment parity and portability.

- Implement Basic Monitoring: Integrate a tool like Prometheus or Arize Phoenix to track “Time to First Token” and response drift.

- Run a NIST-Aligned Scan: Use a security scanner (like Lakera or Giskard) to check for basic prompt injection vulnerabilities.
